We often hear that pain is an unpleasant sensory and emotional experience that is highly personal and subjective. However, pain also occurs in a social context, and we are beginning to understand more about how we communicate pain to others. As well as talking about our pain, we can communicate it to others through nonverbal signals (see Joe Walsh’s blog post ‘Pain communication through body posture’). This is important because some people may not be able to say whether they are in pain, infants being a prime example.

One key channel of communication is facial expression, which can convey to others both the sensory quality of pain (e.g. the feeling of hurt and suffering) and its emotional quality (e.g. the feeling of aversiveness and distress). These signals are broadcast into the social world: when we see them on someone else’s face, they can prompt us to help and can alert us to potential harm in the environment. For this to work, expressions need to show how we feel clearly enough for others to identify and interpret them accurately.

Researchers have examined how well we can identify facial expressions of pain, whether we can discriminate them from core emotions, and whether we can tell genuine pain from posed pain. One of the dominant approaches has been to examine specific facial muscle movements using the Facial Action Coding System (FACS; Ekman et al., 2002). Although this is an extremely informative strategy, it differs from the natural, more holistic way in which we process faces and facial expressions. Fortunately, there are other ways to study expression recognition, drawing on different types of perceptual information to reveal how we process and recognise expressions, including pain.

One alternative approach is to treat the face as another type of visual stimulus that we encounter in a social environment. From a visual perception perspective, the face is an object that contains different types of information used in perception. One example is spatial frequency (SF) information: when we break down the features of an object, we find that it comprises both coarse details (low-SF; e.g. the contour and structure of a face) and fine details (high-SF; e.g. local features and creases).

In a clear, intact face image (i.e. broad-SF), both low- and high-SF information are available. However, we can also present these types of information in isolation to see whether they convey similar or different signals in the recognition of facial expressions. Although there are suggestions that these signals are relevant to the perception of emotion in faces, few studies have examined them in the context of pain expressions. Our study (Wang et al., 2015) therefore investigated the role of spatial frequency information in the identification and discrimination of facial expressions of pain, compared with core emotions. We used a standardised set of facial expression images of pain and core emotions (Roy et al., 2007) and created three versions of it: a set of intact (broad-SF) images, a set of low-SF images (i.e. coarse information only), and a set of high-SF images (i.e. fine details only). For an example of an SF-filtered face image, see Figure 1 in Vuilleumier et al. (2003).
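To make the idea of low-SF and high-SF versions more concrete, here is a minimal sketch of one simple way to split an image into coarse and fine components: blur it with a Gaussian (a low-pass filter) and treat whatever the blur removed as the high-SF residual. This is an illustration under stated assumptions, not the filtering procedure used in Wang et al. (2015). The function names, the pixel-based `sigma` parameter, the 3-standard-deviation kernel truncation and the wrap-around edge handling are all hypothetical choices made for the example, and the images are plain lists of grey-level values so that no image library is needed.

```python
import math

Image = list[list[float]]  # hypothetical representation: rows of grey-level values


def gaussian_kernel(sigma: float) -> list[float]:
    """Normalised 1-D Gaussian weights, truncated at 3 standard deviations (an assumption)."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image: Image, kernel: list[float]) -> Image:
    """Convolve every row with the kernel, wrapping around at the image edges."""
    radius = len(kernel) // 2
    blurred = []
    for row in image:
        n = len(row)
        blurred.append([
            sum(kernel[k + radius] * row[(j + k) % n] for k in range(-radius, radius + 1))
            for j in range(n)
        ])
    return blurred


def transpose(image: Image) -> Image:
    return [list(column) for column in zip(*image)]


def low_pass(image: Image, sigma: float) -> Image:
    """Coarse (low-SF) version: a separable Gaussian blur, horizontal pass then vertical pass."""
    kernel = gaussian_kernel(sigma)
    horizontal = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))


def split_spatial_frequencies(image: Image, sigma: float = 2.0) -> tuple[Image, Image]:
    """Return (low_sf, high_sf); high_sf is the fine detail that the blur removed."""
    low = low_pass(image, sigma)
    high = [[p - q for p, q in zip(img_row, low_row)] for img_row, low_row in zip(image, low)]
    return low, high


if __name__ == "__main__":
    # The finest possible pattern: a one-pixel checkerboard of 0s and 1s.
    checkerboard = [[float((i + j) % 2) for j in range(32)] for i in range(32)]
    low_sf, high_sf = split_spatial_frequencies(checkerboard, sigma=2.0)
    print(round(low_sf[10][10], 3), round(high_sf[10][10], 3))
```

With the checkerboard, the low-SF image comes out as a nearly uniform grey (values very close to 0.5), while the alternating pattern survives almost entirely in the high-SF image. A slowly varying pattern behaves the other way round and ends up mostly in the low-SF image. Adding the two components back together recovers the original, which corresponds to the intact, broad-SF condition. A larger `sigma` discards more fine detail; the values a real study would use are not given here.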

We recruited 64 healthy participants to complete two tasks: an identification task and a discrimination task. In the identification task, participants viewed different faces and identified which expression was being presented (e.g. pain, neutral, anger, disgust, fear, happiness, sadness, or surprise). In the discrimination task, participants were shown a face and asked to decide which of two expressions it showed (e.g. pain or fear, pain or happiness, fear or happiness). We found that both low-SF and high-SF information alone can support the identification and discrimination of pain and core emotions. Interestingly, we found a low-SF advantage for pain faces: when identifying pain, and when discriminating pain from happiness, participants performed better with low-SF images than with high-SF images. This advantage for coarse information was not found for the other expressions we examined, with the exception of disgust.

So what does this tell us about the perception of pain in others? The fact that we found a perceptual advantage for pain (and disgust) faces presented at low-SF suggests that we can still detect pain-related cues under degraded visual conditions. In natural environments, facial displays are degraded when viewed from a distance or in peripheral vision, where fine details may be harder to detect. Our findings suggest that in such challenging conditions we can still perceive the overall affective quality of someone else’s pain without always relying on an analysis of detailed facial features. Research also suggests that coarse and fine information are processed by different neural pathways (Vuilleumier et al., 2003), so it would be interesting to explore whether different mechanisms underlie the perception of others’ facial expressions of pain.

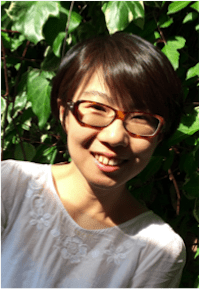

About Shan Wang

Shan is a final-year PhD student based at the Centre for Pain Research, University of Bath. She works under the supervision of Dr Ed Keogh and Prof Chris Eccleston, researching the recognition of facial expressions of pain and the role of spatial frequency information. Shan completed her BSc and MSc in psychology before starting her PhD.

References:

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). The Facial Action Coding System. Salt Lake City: A Human Face.

Roy, S., Roy, C., Fortin, I., Ethier-Majcher, C., Belin, P., & Gosselin, F. (2007). A dynamic facial expression database. Journal of Vision, 7(9), 944. doi:10.1167/7.9.944

Vuilleumier, P., Armony, J. L., Driver, J., & Dolan, R. J. (2003). Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nature Neuroscience, 6(6), 624–631. doi:10.1038/nn1057

Wang, S., Eccleston, C., & Keogh, E. (2015). The role of spatial frequency information in the recognition of facial expressions of pain. Pain. doi:10.1097/j.pain.0000000000000226